Leveling Up Your Work Through Architecture Design and Time Estimation

> This post can be useful for developers at any level! However, it is written mostly for entry-level developers who are starting to transition into a more intermediate role.

You’re rocking your first development job. You’re completing tasks, the team loves you, you still have tons of questions but you can get answers and build what you’re asked to build. But you want to keep improving your skills and giving yourself more options. Some of that skill can only come from actually building things over time. But surely there’s something you can do to level up besides that, right?

There is! One way to deepen your understanding of the software you’re writing, and make yourself look more impressive, is to improve how you estimate your time and how you talk about your work. That’s what we’re going to dig into today!

Some terminology

You might have heard or read terms like “software architecture” and “quality attributes” and, when you tried to look into them, been met with a LOT of jargon and dense terminology. So before we get too far into this, let’s go over a few terms we’ll use. (This is by no means meant to be a complete description of these terms. The aim is to be clear enough to continue our discussion here, and let you get started.)

- Software architecture: This term means how your system is organized. It’s like the blueprint for the application or website you’re building. How do all the pieces we build fit together and interact with each other? What’s the main goal we’re trying to achieve with this codebase? That’s what this term is talking about.

- Software design: Once we have the blueprint, we need to figure out how to build the pieces to make that structure come to life. This is where the design comes in. It’s more code-specific and focuses more on the specifics of what we need to build and how. What languages are we using? How do we get this component to be interactive or usable like we want? This is all the design.

- Software quality attributes: Sometimes referred to as “ilities” because a lot of them end with those letters, these are the words we use to describe the key qualities we want our software to have. Common ones include accessibility, reliability, and performance. You can start with this list of quality attributes or search for “software quality attributes” to find all sorts of articles covering the common ones.

- Time estimation: This is the skill of being able to consider a task and estimate how long it would take you to complete the task. Time can be a funny thing, and it’s pretty common to think something will be easy and have it take way longer, or think something will be complex, and it turns out to be straightforward.

- Trade-offs: This is the idea that you usually can’t maximize every quality at once. There’s a joke about how people want things fast, cheap, and good, and you can’t have all three. This is the same idea. Very often, increasing one quality means decreasing another. Choosing to focus on one quality more than another is called a trade-off. You’re trading the strength of one quality for another.

When we’re using the phrase “architecture design” in this article, we’re combining all these concepts together.

How do we get started?

Ok, so we’ve got some terms now. But what do we do with them? How do we get better at designing software, and estimating our time?

I’ve got two templates to share with you that can help you start to develop these skills. Both will involve writing, but I’ve tried to make them as straightforward to fill out as possible so it’s not a chore to use them. I’ll share links for the templates later on. But first, we’ll go over the actual contents of both of them and talk about how to use them.

These templates can be useful for any task, no matter how complex. However, super simple tasks, like fixing a broken link on a page or adjusting a color value to improve its contrast, may not be the best fit for them.

It is useful to use this template on a task that you fully understand, so you get some practice with thinking about the tradeoffs you’re making, and get used to some of the terminology and how to talk about the software you build.

But this can really help when you start getting larger tasks or features: ones that might contain multiple parts working together, or some complex logic to get the task working properly.

The architecture design template

The goal is to complete this template before you do any work on your task. Use it as a tool to think through your work before you get started, so you have a better understanding of what the task is asking of you and can start thinking about the attributes you’re building for.

There are 7 sections for this document, each with a title and description. Let’s go over each piece to see how they work.

Big picture: What’s the ask?

*What’s the ticket/task trying to solve? What’s the end goal of this body of work? Describe the problem in the most ELI5 way you can.*

Use this section to describe the task you’re working on. Act like you’re talking to someone with very little understanding of the situation, like a project manager on another team or a friend who doesn’t work with you. This section helps you make sure you have a strong handle on the work you’re being asked to do, and what the end result should be.

Requirements

*Are there acceptance criteria? A specific way it needs to work? What makes this ticket / task count as complete? Steps to reproduce and/or screenshots are helpful here too, if available.*

Some of this can often be copied over from the ticket you’re working on. Make note of how you can tell that this task is complete, and any specifics that you need to make sure work as expected when you’re ready to have someone review it.

Constraints

*Are there specific things you can / can’t use? Something you have to make sure doesn’t break? Tools you have to use because of where you have to work in the codebase?*

Making note of constraints can be super helpful. Maybe you have to use a specific UI library to complete this because the rest of the project uses it. Or perhaps this task has been attempted before, and you don’t want to repeat a version that didn’t work. Getting used to thinking about the constraints you’re working within can help prep your brain to think around these concepts.

Architecture and Tradeoffs

*Where does this code live? (login, ui, db, etc) What software quality attributes are we focused on for this task? List out the primary and secondary attributes (maybe a third if it feels necessary).*

In this section, we’re focusing on developing our architecture and design skills in detail. Talk a little about how the codebase is structured, and where the work for this task will be located.

Also, pick one or two software quality attributes that you think fit this task. Does the work you’re doing help improve the site’s overall accessibility? Does it make the application more reliable for end users? Does it help to keep our codebase’s maintainability within a reasonable level? Use one of the lists above or your own searches to build out a list of the attributes you might build for regularly, and pick out one or two of them that relate the most to this task.

Then, talk a little about why you picked those traits and your thoughts about why they’re applicable. Being able to explain your reasoning here will both help you gain more understanding of the work you’re doing, and the words you’re using to describe it, and give whoever reads this document a better glimpse into the work you do and how you think about it!

Initial Gameplan

*Considering all the above, what seems like the path forward? What are the steps for how you think it can be solved/completed? Where are you starting?*

Now that you’ve spent a little time thinking through the task and some of the things you should remember about it (like how it’s structured, the tools you have to use, and what’s important to keep in mind while you’re solving it), write out the steps you think you should take to accomplish the task.

It’s perfectly okay to not fully follow this plan as you start to build! We can never fully predict everything that might happen as we start to work in a codebase. The goal here is to give yourself a solid place to start, with all the details we’ve listed fresh in your mind.

Process Notes

*As you solve this - keep this area updated with rough notes. What did you try that didn’t work? Why not? If you have to pivot, what did you switch to and why? Maybe something worked but you still pivoted - what tradeoffs were made, and what’s the reasoning behind it?*

As the description implies, this space is all for you. Try to keep notes as you go. If you do have to change course from your game plan, why and what did you change? Did you find another problem you didn’t even know existed? Or maybe you were able to try a new concept out and it worked! Celebrate your process here. None of this needs to be in complete sentences or easy for anyone but you to understand. This space is all yours to help you keep thinking through the work as you’re doing it!

Final Solution

*You did it! Form a narrative. Now that you’ve got the issue solved, what does it look like? How did you do it? What did you learn along the way? Is the final solution the same as what you thought, or did the ask pivot along the way? Share screenshots if available.*

The final wrap-up! Try to write this similarly to how you started with the big picture. Did your initial plan end up working, and if not why? Were there any interesting plot twists throughout the process? Share the wins, the struggles, and the end result here. Screenshots can be perfect here to see a visual of your work!

I also typically leave a little space at the bottom to wrap up any lessons I learned for myself, and to talk about my time estimate and the reasoning behind how it turned out the way it did. But those are completely optional.

---

Because I use a Notion database to help me keep track of these documents, I also have a few properties I can fill out related to the project this document is for, what my time estimate and result were, and the quality attributes I selected. Having those visible at a glance is super helpful for me.

The biggest thing I’d recommend to track with this is the date you filled it out. Having that date to help you organize and find your documents is super helpful as your collection grows. I typically title mine based on the project it’s for and the name of the task, but you can name yours whatever you’d like!
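If you’d rather keep these documents as plain text files instead of in a tool like Notion, here’s a minimal sketch of how you could generate a blank copy of the seven sections. The prompts are condensed from the descriptions above. The function name, the `Date:` line, and the example project and task names are my own placeholders, not part of the actual template.

```python
from datetime import date

# The seven section titles from the template, each with a condensed prompt.
SECTIONS = [
    ("Big picture: What's the ask?", "What's the ticket/task trying to solve?"),
    ("Requirements", "What makes this ticket/task count as complete?"),
    ("Constraints", "Are there specific things you can/can't use?"),
    ("Architecture and Tradeoffs", "Where does this code live? Which quality attributes matter most?"),
    ("Initial Gameplan", "What are the steps for how you think it can be solved?"),
    ("Process Notes", "What did you try? What didn't work? Why did you pivot?"),
    ("Final Solution", "What does the solved issue look like? What did you learn?"),
]


def render_design_doc(project: str, task: str, created: date) -> str:
    """Build a blank design document titled by project and task, with a date."""
    lines = [f"{project}: {task}", f"Date: {created.isoformat()}", ""]
    for title, prompt in SECTIONS:
        # Each section gets its title, its prompt in italics, and room to write.
        lines += [title, f"*{prompt}*", "", ""]
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical project and task names, purely for illustration.
doc = render_design_doc("Marketing site", "Add newsletter signup form", date(2024, 1, 15))
print(doc)
```

Putting the date in ISO format (year first) means the documents sort in order if you ever list them by that line or use it in a filename.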

Now let’s talk about how to track our time for this task.

The time estimate template

This one is a lot less detailed, but just as important! Being able to improve your knowledge of how long different tasks take you will be a super handy skill as your career continues.

The main idea with this template is to keep track of two sections: how long you think something will take you (the estimate), and how long it truly takes you. Our goal is to get those two sections to be as close to the same number as possible.

Remember that our goal here is NOT perfection. That’s impossible to reach. Our goal is simply to get them to be close to each other. Most people (even managers) understand that an estimate is not a guarantee. But the closer you can get your estimates to the true time it takes you, the more reliable you seem and the more accurately your team can make progress.

There are three main sections to this template.

The actual numbers

Most importantly, we want an estimated total time and an actual total time, as well as a calculation of the difference between those two numbers. Is our estimate higher, or is the actual total time higher? That difference is what we’re trying to keep as close to zero as possible.

I like to break my time tracking down into categories, so I can also see if particular parts of my work take me longer (or shorter) than I think. For each of these, I do the same thing: make an estimate, and record the actual value.

The categories I like to track are:

- Investigation: time to fill out my architecture design, do a little looking into the task, make sure I understand fully what I’m being asked to do, and that I have all the information I need to complete it.

- Coding: time to do the actual work. Most of my time calculation goes into this category.

- Testing: I use this for either writing actual unit or end-to-end tests, or for manual testing. This is where I double-check to make sure I didn’t break anything or track time spent on that one last piece of functionality that doesn’t quite work right.

- Review: any feedback I get on my Pull Requests or code reviews that requires me to make changes goes into this category.
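To make the math concrete, here’s a rough sketch of the numbers this part of the template tracks: an estimate and an actual value per category, plus the totals and the difference between them. The class and function names are my own, and the estimates are made-up numbers for illustration. Treat it as one possible way to do the arithmetic, not as part of the template itself.

```python
from dataclasses import dataclass


@dataclass
class CategoryTime:
    name: str
    estimated_minutes: int
    actual_minutes: int

    @property
    def difference(self) -> int:
        # Positive: it took longer than estimated. Negative: it took less time.
        return self.actual_minutes - self.estimated_minutes


def summarize(categories: list[CategoryTime]) -> dict[str, int]:
    """Add up the estimates and actuals, and compute the overall difference."""
    estimated = sum(c.estimated_minutes for c in categories)
    actual = sum(c.actual_minutes for c in categories)
    return {"estimated": estimated, "actual": actual, "difference": actual - estimated}


# Hypothetical numbers, purely for illustration.
task = [
    CategoryTime("Investigation", estimated_minutes=30, actual_minutes=0),
    CategoryTime("Coding", estimated_minutes=90, actual_minutes=105),
    CategoryTime("Testing", estimated_minutes=30, actual_minutes=15),
    CategoryTime("Review", estimated_minutes=15, actual_minutes=15),
]

for c in task:
    print(f"{c.name}: estimated {c.estimated_minutes}m, "
          f"actual {c.actual_minutes}m, difference {c.difference:+d}m")

totals = summarize(task)
print(totals)
```

Tracking the difference per category as well as overall is the useful part: in this made-up example the total came in under the estimate, but coding on its own still ran over.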

Space to record the numbers as I go

The Pomodoro technique works really well for me with time tracking, though I change up my “working time” numbers to make them easier for me to calculate.

I’ve found the most straightforward way for me to do this is to have a title for each section of time that I’m tracking, with space underneath each one. Then, I have a legend of colored dot emojis, each representing a different amount of time: 15 minutes, 30 minutes, and 60 minutes.

Then, as I do my rounds of working time, each time I’m done with a round, I select the right colored dot for the amount of time I did, copy it, and paste it under the section that work belongs to. Keep repeating this until the work is complete!

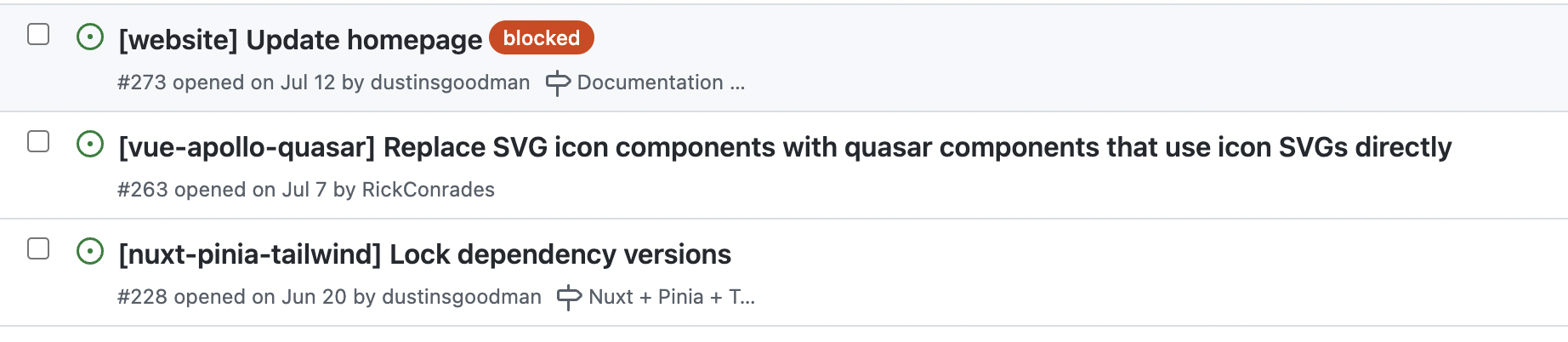

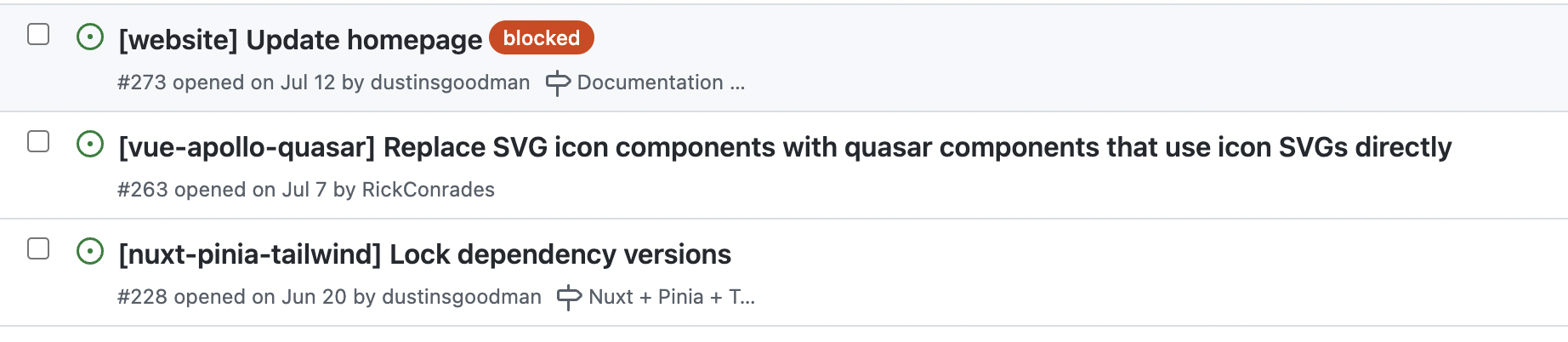

Once your task is done, all you need to do is count your dots, and record the total time they represent in your actual time section from above. Here’s a screenshot of what one of my completed sections looks like, so you can better see what I mean.

From this tracking section, I can quickly see that I spent 15 minutes on both testing and review, and an hour and 45 minutes on coding. If I don’t happen to spend any time on a section (in this instance, I’d done some investigating in a separate ticket, so I already knew the work that needed to be done), I just leave it blank.

I have a section at the top of my page for tracking the total number for each category. So I use this area to keep track as I’m doing small rounds of work. Then, when I’m done, I add up each number and put it in the section at the top of the page for that category.
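Counting dots by hand works fine, but if you’d like to double-check your math, here’s a small sketch of the same idea in code. The colors in the legend are an assumption on my part (pick whatever dots you like), and the function names are hypothetical. The dots below are just one combination that adds up to a coding total of an hour and 45 minutes.

```python
from collections import Counter

# Assumed legend: which color stands for which amount of time is up to you.
DOT_MINUTES = {"🟢": 15, "🟡": 30, "🔴": 60}


def minutes_from_dots(dots: str) -> int:
    """Turn a pasted string of dot emojis into total minutes, ignoring anything else."""
    counts = Counter(ch for ch in dots if ch in DOT_MINUTES)
    return sum(DOT_MINUTES[dot] * n for dot, n in counts.items())


def format_minutes(total: int) -> str:
    """Show a minute count as hours and minutes."""
    hours, minutes = divmod(total, 60)
    return f"{hours}h {minutes:02d}m"


# One possible set of dots for each section; a blank section counts as zero.
tracking = {
    "Investigation": "",
    "Coding": "🔴🟡🟢",
    "Testing": "🟢",
    "Review": "🟢",
}

actuals = {section: minutes_from_dots(dots) for section, dots in tracking.items()}
for section, total in actuals.items():
    print(f"{section}: {format_minutes(total)}")
```

Because anything that isn’t in the legend gets ignored, you can paste a section straight from your notes, spaces and all, without cleaning it up first.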

Notes

I also have a section for notes at the bottom of my document. I keep this area for anything relating to my time tracking. Did something break unexpectedly, causing my estimate to be off? Did I not need a section for some reason? Or did something work way better than I thought it would?

Those are the kinds of things worth keeping notes of here. Can you tell why your estimate and actual times were off? Sometimes we simply don’t know, but being able to keep notes on the things we can realize as we’re doing them helps us get better the next time!

Tying these together and talking about it with others

You can use both of these templates together or on their own, and you’ll gain a great amount of knowledge about your skill level and your growth over time from them! But they really shine when used together.

While these are great tools for your own personal knowledge and growth, I also highly recommend sharing them with someone else. It’s a great idea to set a goal to get better at estimating your time, and to share that goal with your manager. Then, you can use the time estimation template to build up some estimates, and share those with your manager.

Maybe you have a senior developer on your team that you really respect, and you’d like to get their opinion on the task you’re working on. If you’ve filled out the architecture design for that task, you can share it with them and have a conversation about it. They can potentially provide you with things to consider for your next task, or get you thinking more deeply about the quality attributes you selected, and why they may or may not have been the best fit for your work.

The design documents are also great to share with your manager! It’s a great way to show that you’re starting to think about your work on a deeper level and starting to consider the quality and complexity of your work. It can also be helpful reference material for them when promotions and new work become available. They’re more likely to think you might be a good pick for the next big thing if you’re already showing them you’re starting to think about things at a higher level!

The Notion template links

The links for both of these documents as Notion templates are below. Please feel free to duplicate them for yourself if you like using Notion, or just take a peek to see their actual layout and adapt that for whatever tool works best for you.

These documents are set up to be used within a Notion database. We won’t cover that in detail here, but the Notion documentation should be able to take care of those details for you (and we have a link to get you started below as well). In general, you can create a database within Notion, and then set a template to use for each new entry. That way, when you go to create a new item, it will automatically pull up these templates for you, so you don’t have to copy and paste them every time! And the fields at the top will be visible when you go to look at your database, which makes it a little nicer to get a quick overview of your documents and the progress you’re making.

Now go forth and deepen your knowledge!

- Time estimation template

- Architecture design template

- Notion database templates documentation...